Tutorial IV: Using Runner Advanced II¶

The purpose of this tutorial is to learn more about Runner’s advanced features and advanced visualization options. The usecase and the data are the same.

Using code from the previous tutorial:

[1]:

%%capture --no-stdout

import warnings

from abc import ABC, abstractmethod

import matplotlib.pyplot as plt

import numpy as np

from hdbscan import HDBSCAN

from numba.errors import NumbaWarning

from numpy.random import RandomState

from sklearn.cluster import KMeans

from sklearn.metrics import adjusted_rand_score, adjusted_mutual_info_score

from dpemu import runner

from dpemu.dataset_utils import load_digits_

from dpemu.filters.common import Missing

from dpemu.ml_utils import reduce_dimensions

from dpemu.nodes import Array

from dpemu.plotting_utils import visualize_best_model_params, visualize_scores, print_results_by_model

warnings.simplefilter("ignore", category=NumbaWarning)

def get_data():

return load_digits_()

def get_err_root_node():

err_root_node = Array()

err_root_node.addfilter(Missing("probability", "missing_value"))

return err_root_node

def get_err_params_list():

p_steps = np.linspace(0, .5, num=6)

err_params_list = [{"probability": p, "missing_value": 0} for p in p_steps]

return err_params_list

class Preprocessor:

def __init__(self):

self.random_state = RandomState(42)

def run(self, _, data, params):

reduced_data = reduce_dimensions(data, self.random_state)

return None, reduced_data, {"reduced_data": reduced_data}

class AbstractModel(ABC):

def __init__(self):

self.random_state = RandomState(42)

@abstractmethod

def get_fitted_model(self, data, params):

pass

def run(self, _, data, params):

labels = params["labels"]

fitted_model = self.get_fitted_model(data, params)

return {

"AMI": round(adjusted_mutual_info_score(labels, fitted_model.labels_, average_method="arithmetic"), 3),

"ARI": round(adjusted_rand_score(labels, fitted_model.labels_), 3),

}

class KMeansModel(AbstractModel):

def __init__(self):

super().__init__()

def get_fitted_model(self, data, params):

labels = params["labels"]

n_classes = len(np.unique(labels))

return KMeans(n_clusters=n_classes, random_state=self.random_state).fit(data)

class HDBSCANModel(AbstractModel):

def __init__(self):

super().__init__()

def get_fitted_model(self, data, params):

return HDBSCAN(

min_samples=params["min_samples"],

min_cluster_size=params["min_cluster_size"],

core_dist_n_jobs=1

).fit(data)

def main():

data, labels, label_names, dataset_name = get_data()

df = runner.run(

train_data=None,

test_data=data,

preproc=Preprocessor,

preproc_params=None,

err_root_node=get_err_root_node(),

err_params_list=get_err_params_list(),

model_params_dict_list=get_model_params_dict_list(labels),

)

print_results_by_model(df, ["missing_value_id", "labels", "reduced_data"])

visualize(df, label_names, dataset_name, data)

Let’s redo the step where we defined the hyperparameters used by our models. In the previous tutorial, we learned that Runner runs every model in the list defined by this function with all different sets of hyperparameters listed in the corresponding params_list -element. Now if we add more sets of hyperparameters to our only HDBSCAN’s param_list, all these results will be listed under “HDBSCAN #1” in the resulting Dataframe. Using this information some of our visualizers are able to visualize hyperparameter-optimized results. Now we are also testing few different values for HDBSCAN’s min_samples. Larger min_samples just means that more datapoints will be seen as noise.

[2]:

def get_model_params_dict_list(labels):

min_cluster_size_steps = [25, 50, 75]

min_samples_steps = [1, 10]

return [

{"model": KMeansModel, "params_list": [{"labels": labels}]},

{"model": HDBSCANModel, "params_list": [{

"min_cluster_size": min_cluster_size,

"min_samples": min_samples,

"labels": labels

} for min_cluster_size in min_cluster_size_steps for min_samples in min_samples_steps]},

]

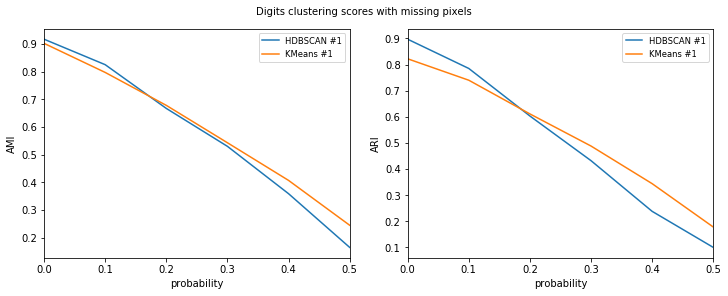

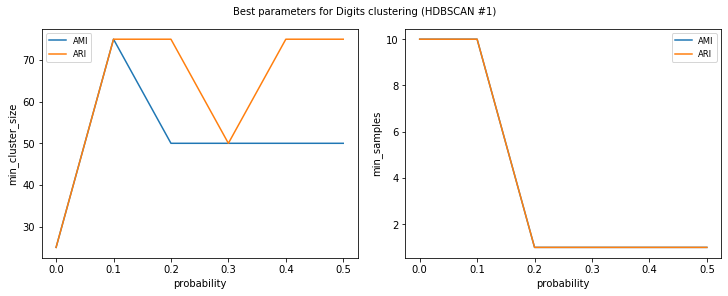

Let’s also partially redo our visualizations. First we would like to visualize the hyperparameter-optimized scores for each of the models. Secondly we would like to see the best hyperparameters for HDBSCAN given the error.

[3]:

def visualize(df, label_names, dataset_name, data):

visualize_scores(

df,

score_names=["AMI", "ARI"],

is_higher_score_better=[True, True],

err_param_name="probability",

title=f"{dataset_name} clustering scores with missing pixels",

)

visualize_best_model_params(

df,

model_name="HDBSCAN",

model_params=["min_cluster_size", "min_samples"],

score_names=["AMI", "ARI"],

is_higher_score_better=[True, True],

err_param_name="probability",

title=f"Best parameters for {dataset_name} clustering"

)

plt.show()

Let’s check out the results. Scores for HDBSCAN seem slightly better and smaller min_samples seems to be a better fit for data with lots of error.

[4]:

main()

100%|██████████| 6/6 [00:54<00:00, 10.64s/it]

HDBSCAN #1

KMeans #1

| AMI | ARI | missing_value | probability | min_cluster_size | min_samples | time_err | time_pre | time_mod | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 0.869 | 0.810 | 0 | 0.0 | 25.0 | 1.0 | 0.005 | 34.081 | 0.072 |

| 1 | 0.917 | 0.897 | 0 | 0.0 | 25.0 | 10.0 | 0.005 | 34.081 | 0.079 |

| 2 | 0.908 | 0.883 | 0 | 0.0 | 50.0 | 1.0 | 0.005 | 34.081 | 0.069 |

| 3 | 0.907 | 0.883 | 0 | 0.0 | 50.0 | 10.0 | 0.005 | 34.081 | 0.075 |

| 4 | 0.908 | 0.883 | 0 | 0.0 | 75.0 | 1.0 | 0.005 | 34.081 | 0.067 |

| 5 | 0.907 | 0.883 | 0 | 0.0 | 75.0 | 10.0 | 0.005 | 34.081 | 0.075 |

| 6 | 0.815 | 0.766 | 0 | 0.1 | 25.0 | 1.0 | 0.014 | 34.300 | 0.074 |

| 7 | 0.816 | 0.763 | 0 | 0.1 | 25.0 | 10.0 | 0.014 | 34.300 | 0.074 |

| 8 | 0.821 | 0.779 | 0 | 0.1 | 50.0 | 1.0 | 0.014 | 34.300 | 0.310 |

| 9 | 0.807 | 0.751 | 0 | 0.1 | 50.0 | 10.0 | 0.014 | 34.300 | 0.214 |

| 10 | 0.815 | 0.755 | 0 | 0.1 | 75.0 | 1.0 | 0.014 | 34.300 | 0.160 |

| 11 | 0.825 | 0.785 | 0 | 0.1 | 75.0 | 10.0 | 0.014 | 34.300 | 0.169 |

| 12 | 0.619 | 0.465 | 0 | 0.2 | 25.0 | 1.0 | 0.006 | 35.808 | 0.092 |

| 13 | 0.630 | 0.518 | 0 | 0.2 | 25.0 | 10.0 | 0.006 | 35.808 | 0.080 |

| 14 | 0.667 | 0.597 | 0 | 0.2 | 50.0 | 1.0 | 0.006 | 35.808 | 0.085 |

| 15 | 0.634 | 0.524 | 0 | 0.2 | 50.0 | 10.0 | 0.006 | 35.808 | 0.130 |

| 16 | 0.667 | 0.603 | 0 | 0.2 | 75.0 | 1.0 | 0.006 | 35.808 | 0.088 |

| 17 | 0.631 | 0.509 | 0 | 0.2 | 75.0 | 10.0 | 0.006 | 35.808 | 0.076 |

| 18 | 0.525 | 0.422 | 0 | 0.3 | 25.0 | 1.0 | 0.015 | 35.284 | 0.237 |

| 19 | 0.526 | 0.389 | 0 | 0.3 | 25.0 | 10.0 | 0.015 | 35.284 | 0.276 |

| 20 | 0.530 | 0.432 | 0 | 0.3 | 50.0 | 1.0 | 0.015 | 35.284 | 0.164 |

| 21 | 0.524 | 0.390 | 0 | 0.3 | 50.0 | 10.0 | 0.015 | 35.284 | 0.084 |

| 22 | 0.530 | 0.432 | 0 | 0.3 | 75.0 | 1.0 | 0.015 | 35.284 | 0.145 |

| 23 | 0.524 | 0.390 | 0 | 0.3 | 75.0 | 10.0 | 0.015 | 35.284 | 0.180 |

| 24 | 0.348 | 0.195 | 0 | 0.4 | 25.0 | 1.0 | 0.007 | 17.956 | 0.048 |

| 25 | 0.196 | 0.061 | 0 | 0.4 | 25.0 | 10.0 | 0.007 | 17.956 | 0.052 |

| 26 | 0.359 | 0.237 | 0 | 0.4 | 50.0 | 1.0 | 0.007 | 17.956 | 0.043 |

| 27 | 0.196 | 0.061 | 0 | 0.4 | 50.0 | 10.0 | 0.007 | 17.956 | 0.054 |

| 28 | 0.359 | 0.238 | 0 | 0.4 | 75.0 | 1.0 | 0.007 | 17.956 | 0.042 |

| 29 | 0.196 | 0.061 | 0 | 0.4 | 75.0 | 10.0 | 0.007 | 17.956 | 0.049 |

| 30 | 0.017 | 0.000 | 0 | 0.5 | 25.0 | 1.0 | 0.012 | 17.873 | 0.093 |

| 31 | 0.018 | 0.002 | 0 | 0.5 | 25.0 | 10.0 | 0.012 | 17.873 | 0.055 |

| 32 | 0.165 | 0.073 | 0 | 0.5 | 50.0 | 1.0 | 0.012 | 17.873 | 0.041 |

| 33 | 0.084 | 0.021 | 0 | 0.5 | 50.0 | 10.0 | 0.012 | 17.873 | 0.050 |

| 34 | 0.163 | 0.100 | 0 | 0.5 | 75.0 | 1.0 | 0.012 | 17.873 | 0.039 |

| 35 | 0.040 | 0.014 | 0 | 0.5 | 75.0 | 10.0 | 0.012 | 17.873 | 0.050 |

| AMI | ARI | missing_value | probability | time_err | time_pre | time_mod | |

|---|---|---|---|---|---|---|---|

| 0 | 0.902 | 0.822 | 0 | 0.0 | 0.005 | 34.081 | 0.115 |

| 1 | 0.797 | 0.740 | 0 | 0.1 | 0.014 | 34.300 | 0.149 |

| 2 | 0.678 | 0.611 | 0 | 0.2 | 0.006 | 35.808 | 0.647 |

| 3 | 0.543 | 0.488 | 0 | 0.3 | 0.015 | 35.284 | 0.484 |

| 4 | 0.407 | 0.344 | 0 | 0.4 | 0.007 | 17.956 | 0.224 |

| 5 | 0.245 | 0.178 | 0 | 0.5 | 0.012 | 17.873 | 0.477 |

The notebook for this tutorial can be found here.